OpenAI co-founder reckons AI training has hit a wall, forcing AI labs to train their models smarter, not just bigger

By Jeremy Laird, published 12 November 2024

Seems the LLMs have run out of arguments on Reddit to scrape…

Ilya Sutskever, co-founder of OpenAI, thinks existing approaches to scaling up large language models have plateaued. For significant future progress, AI labs will need to train smarter, not just bigger, and LLMs will need to think a little bit longer.

Speaking to Reuters, Sutskever explained that scaling up the pre-training phase of large language models, such as the ones behind ChatGPT, is reaching its limits. Pre-training is the initial phase in which a model processes huge quantities of unlabeled data to learn language patterns and structures.

Until recently, adding scale, in other words increasing the amount of data available for training, was enough to produce a more powerful and capable model. That's no longer the case. Instead, exactly what you train the model on, and how, matters more.


“The 2010s were the age of scaling, now we’re back in the age of wonder and discovery once again. Everyone is looking for the next thing,” Sutskever reckons, “scaling the right thing matters more now than ever.”

The backdrop here is the increasingly apparent difficulty AI labs are having in making major advances beyond models at around the power and performance of GPT-4.

The short version of this narrative is that everyone now has access to the same, or at least similar, training data through various online sources. It’s no longer possible to get an edge simply by throwing more raw data at the problem. So, in very simple terms, training smarter, not just bigger, is what will now give AI outfits an edge.

Another enabler for LLM performance sits at the other end of the process, when the models are fully trained and being accessed by users: the stage known as inferencing.


Here, the idea is to use a multi-step approach to solving problems and queries in which the model can feed back into itself, leading to more human-like reasoning and decision-making.

“It turned out that having a bot think for just 20 seconds in a hand of poker got the same performance boost as scaling up the model by 100,000x and training it for 100,000 times longer,” says Noam Brown, an OpenAI researcher who worked on the latest o1 LLM.


In other words, having bots think longer rather than just spew out the first thing that comes to mind can deliver better results. If thinking longer proves a productive approach, the AI hardware industry could shift away from massive training clusters towards banks of GPUs focussed on improved inferencing.
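To make the "think longer" idea a little more concrete, here is a toy sketch in Python. It is not how o1 or any real LLM works. It assumes a hypothetical stand-in "model" (`propose_answer`) that makes random guesses at a square root, and a hypothetical checker (`score`) that rates each guess. The only point is that the same fixed "model" gives better answers when it is allowed more attempts at answer time.

```python
import random


def propose_answer(target: float, rng: random.Random) -> float:
    """Hypothetical stand-in for one model sample: a random guess at sqrt(target)."""
    return rng.uniform(0.0, target)


def score(candidate: float, target: float) -> float:
    """Hypothetical checker: higher is better, 0 would be a perfect answer."""
    return -abs(candidate * candidate - target)


def answer_with_budget(target: float, n_samples: int, seed: int = 0) -> float:
    """Draw n_samples guesses and keep the one the checker rates highest."""
    rng = random.Random(seed)
    candidates = [propose_answer(target, rng) for _ in range(n_samples)]
    return max(candidates, key=lambda c: score(c, target))


if __name__ == "__main__":
    target = 2.0
    # Same "model", same seed, only the answer-time budget changes.
    for budget in (1, 10, 1000):
        best = answer_with_budget(target, budget)
        print(budget, best, abs(best * best - target))
```

Because every run uses the same seed, a bigger budget always includes the guesses made with a smaller one, so the error can only stay the same or shrink as the budget grows. Real inference-time methods are far more sophisticated, but the trade is the same: more compute per query in exchange for a better answer.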

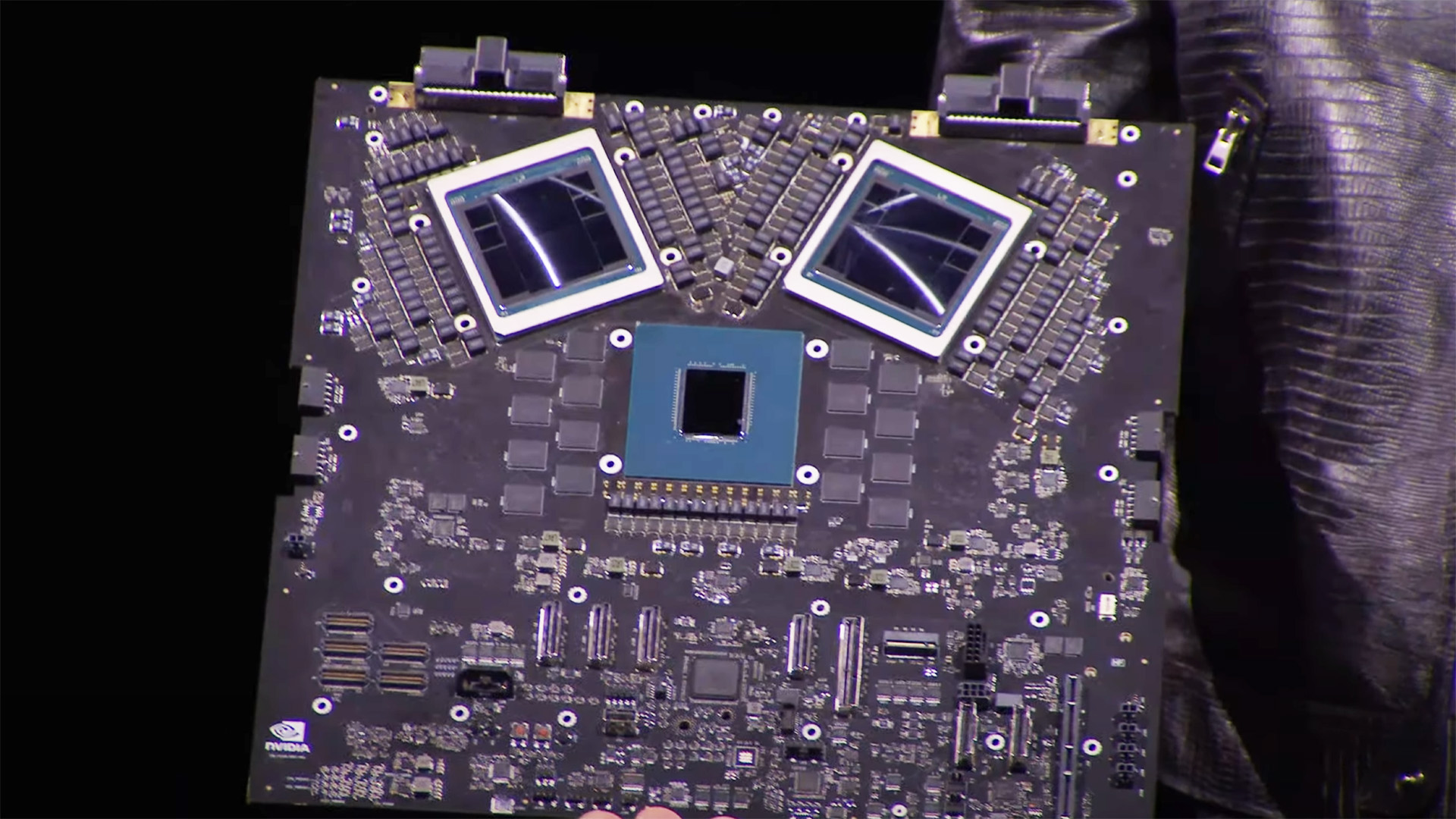

Of course, either way, Nvidia is likely to be ready to take everyone’s money. The increase in demand for AI GPUs for inferencing is indeed something Nvidia CEO Jensen Huang recently noted.

“We’ve now discovered a second scaling law, and this is the scaling law at a time of inference. All of these factors have led to the demand for Blackwell [Nvidia’s next-gen GPU architecture] being incredibly high,” Huang said recently.

How long it will take for a generation of cleverer bots to appear thanks to these methods isn’t clear. But the effort will probably show up in Nvidia’s bank balance soon enough.

Jeremy has been writing about technology and PCs since the 90nm Netburst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.


Source: https://www.pcgamer.com/